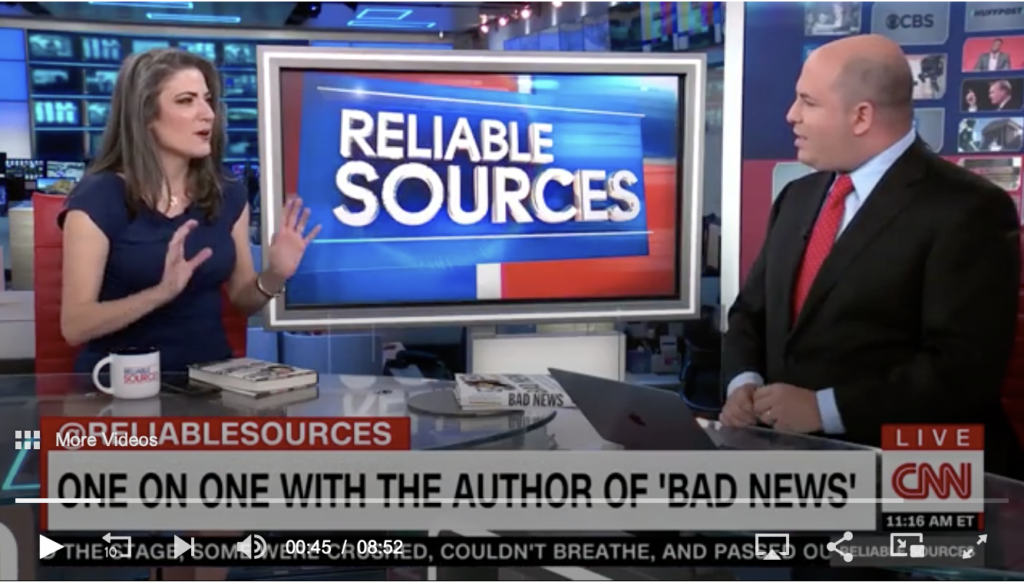

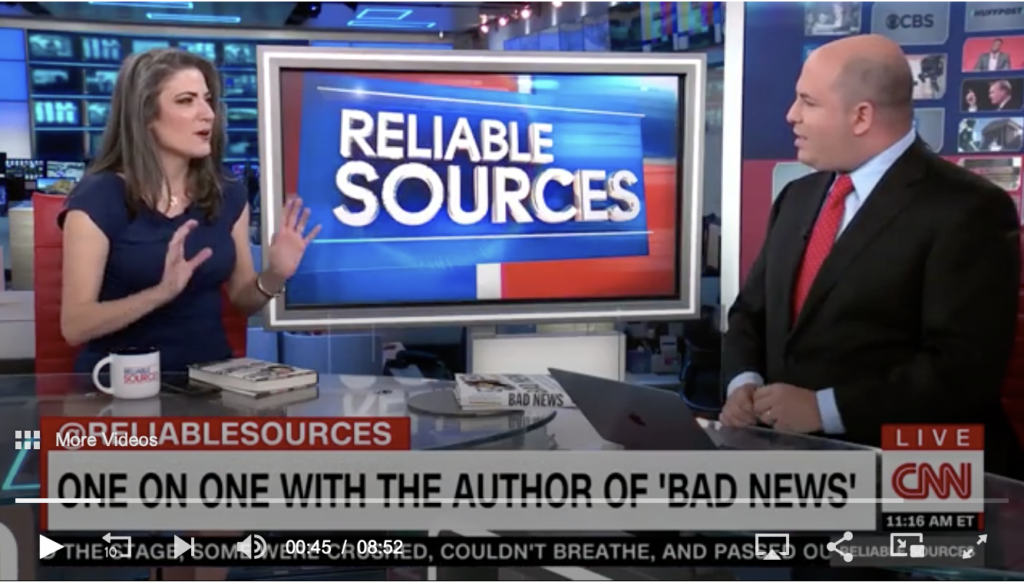

Newsweek's Batya Ungar-Sargon, author of Bad News: How Woke Media Is Undermining Democracy, speaks with CNN's "Reliable Sources" about how the media covered the Virginia gubernatorial election.

"We are hiding a class divide in America," she said. "We are hiding disgusting levels of income inequality in America. We are hiding the total dispossession of the working class of all races by focusing on a very highly specialized academic language about race."

Henry Louis Gates Jr., writing in the NYT in 2016:

The Harvard sociologist William Julius Wilson calls the remarkable gains in black income “the most significant change” since Dr. King’s passing. When adjusted for inflation to 2014 dollars, the percentage of African-Americans making at least $75,000 more than doubled from 1970 to 2014, to 21 percent. Those making $100,000 or more nearly quadrupled, to 13 percent (in contrast, white Americans saw a less impressive increase, from 11 to 26 percent). Du Bois’s “talented 10th” has become the “prosperous 13 percent.”

But, Dr. Wilson is quick to note, the percentage of Black America with income below $15,000 declined by only four percentage points, to 22 percent.

In other words, there are really two nations within Black America. The problem of income inequality, Dr. Wilson concludes, is not between Black America and White America but between black haves and have-nots, something we don’t often discuss in public in an era dominated by a narrative of fear and failure and the claim that racism impacts 42 million people in all the same ways.

More statistics from a 2016 NYT article: "Class Is Now a Stronger Predictor of Well-Being Than Race"

Here’s a true statement: America’s historical mistreatment of blacks was uniquely evil and continues to depress the fortunes of African-Americans. Here’s another true statement: Class has become a stronger predictor of wellbeing than race. . . .

Social class “is the single factor with the most influence on how ready" a child is to learn when they start kindergarten, according to the liberal Economic Policy Institute. Low-income white kids score considerably lower in reading and math skills than middle-class white kids. Add race to the mix, and class still remains the Great Divide when it comes to school readiness.

America has hurt blacks grievously; their progress remains dismally slow. But working-class whites are in free fall. The educational achievement gap is now almost two times higher between lower and higher income students than it is between black and white students. That’s a big change from the past: In 1970, the race gap in achievement was more than one and a half times higher than the class gap. Since then, says Stanford University’s Sean Reardon, the class gap has grown by 30 to 40 percent, and become the most potent predictor of school success.

While single parent families are far more common among African-Americans than whites, less educated whites — who also tend to be lower income — are seeing an unprecedented dissolution of their families. Seventy percent of whites without a high school degree were part of an intact nuclear family in 1972; that number plummeted to 36 percent by 2008. (The comparable numbers for blacks were 54 percent and 21 percent.)

One more set of statistics, this time on the income of black households (from Wikipedia):

27.3% of black households earned an income between $25,000 and $50,000, 15.2% earned between $50,000 and $75,000, 7.6% earned between $75,000 and $100,000, and 9.4% earned more than $100,000.